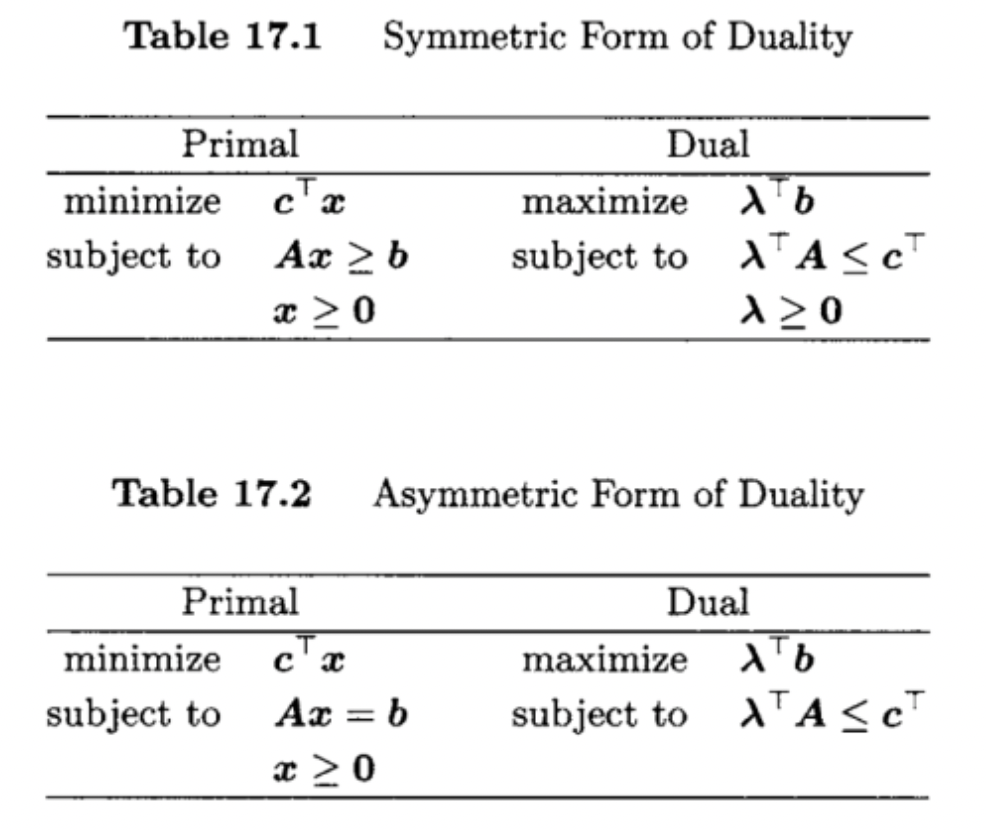

Suppose that we are given a linear programming problem of the form

minimize subject to c⊤xAx≥bx≥0. We refer to the above as the primal problem. We define the corresponding dual problem as

maximize subject to λ⊤bλ⊤A≤c⊤λ≥0. We refer to the variable λ∈Rm as the dual vector. Note that the cost vector c in the primal has moved to the constraints in the dual. The vector b on the righthand side of Ax≥b becomes part of the cost in the dual. Thus, the roles of b and c are reversed. The form of duality defined above is called the symmetric form of duality.

Note that the dual of the dual problem is the primal problem.

In summary:

Properties of Dual Problems

Lemma 1 (Weak Duality Lemma)

Suppose that x and λ are feasible solutions to primal and dual LP problems, respectively (either in the symmetric or asymmetric form). Then, c⊤x≥λ⊤b.

Proof

We prove this lemma only for the asymmetric form of duality. The proof for the symmetric form involves only a slight modification.

Because x and λ are feasible, we have Ax=b,x≥0, and λ⊤A≤c⊤. Postmultiplying both sides of the inequality λ⊤A≤c⊤ by x≥0 yields λ⊤Ax≤c⊤ x. But Ax=b, hence λ⊤b≤c⊤x.

The weak duality lemma states that a feasible solution to either problem yields a bound on the optimal cost of the other problem. The cost in the dual is never above the cost in the primal. In particular, the optimal cost of the dual is less than or equal to the optimal cost of the primal, that is, "maximum ≤ minimum." Hence, if the cost of one of the problems is unbounded, then the other problem has no feasible solution. In other words, if "minimum =−∞ " or "maximum = +∞," then the feasible set in the other problem must be empty.

Theorem 1

Suppose that x0 and λ0 are feasible solutions to the primal and dual, respectively (either in symmetric or asymmetric form). If c⊤x0=λ0⊤b, then x0 and λ0 are optimal solutions to their respective problems.

Proof

Let x be an arbitrary feasible solution to the primal problem. Because λ0 is a feasible solution to the dual, by the weak duality lemma, c⊤x≥λ0⊤b. So, if c⊤x0=λ0⊤b, then c⊤x0=λ0⊤b≤c⊤x. Hence, x0 is optimal for the primal.

On the other hand, let λ be an arbitrary feasible solution to the dual problem. Because x0 is a feasible solution to the primal, by the weak duality lemma, c⊤x0≥λ⊤b. Therefore, if c⊤x0=λ0⊤b, then λ⊤b≤c⊤x0=λ0⊤b. Hence, λ0 is optimal for the dual.

We can interpret Theorem 1 as follows. The primal seeks to minimize its cost, and the dual seeks to maximize its cost. Because the weak duality lemma states that "maximum ≤ minimum," each problem "seeks to reach the other." When their costs are equal for a pair of feasible solutions, both solutions are optimal, and we have “maximum = minimum.”

It turns out that the converse of Theorem 1 is also true, that is, “maximum = minimum” always holds. In fact, we can prove an even stronger result, known as the duality theorem.

Theorem 2 (Duality Theorem)

If the primal problem (either in symmetric or asymmetric form) has an optimal solution, then so does the dual, and the optimal values of their respective objective functions are equal.

Proof

We first prove the result for the asymmetric form of duality. Assume that the primal has an optimal solution. Then, by the fundamental theorem of LP, there exists an optimal basic feasible solution. As is our usual notation, let B be the matrix of the corresponding m basic columns, D the matrix of the n−m non-basic columns, cB the vector of elements of c corresponding to basic variables, cD the vector of elements of c corresponding to nonbasic variables, and rD the vector of reduced cost coefficients. Then, by the Theorem presented in the LP section,

rD⊤=cD⊤−cB⊤B−1D≥0⊤. Hence,

cB⊤B−1D≤cD⊤. Define

λ⊤=cB⊤B−1. Then,

cB⊤B−1D=λ⊤D≤cD⊤ We claim that λ is a feasible solution to the dual. To see this, assume for convenience (and without loss of generality) that the basic columns are the first m columns of A. Then,

λ⊤A=λ⊤[B,D]=[cB⊤,λ⊤D]≤[cB⊤,cD⊤]=c⊤. Hence, λ⊤A≤c⊤ and thus λ⊤=cBB−1 is feasible.

We claim that λ is also an optimal feasible solution to the dual. To see this, note that

λ⊤b=cB⊤B−1b=cB⊤xB. Thus, by Theorem 1, λ is optimal.

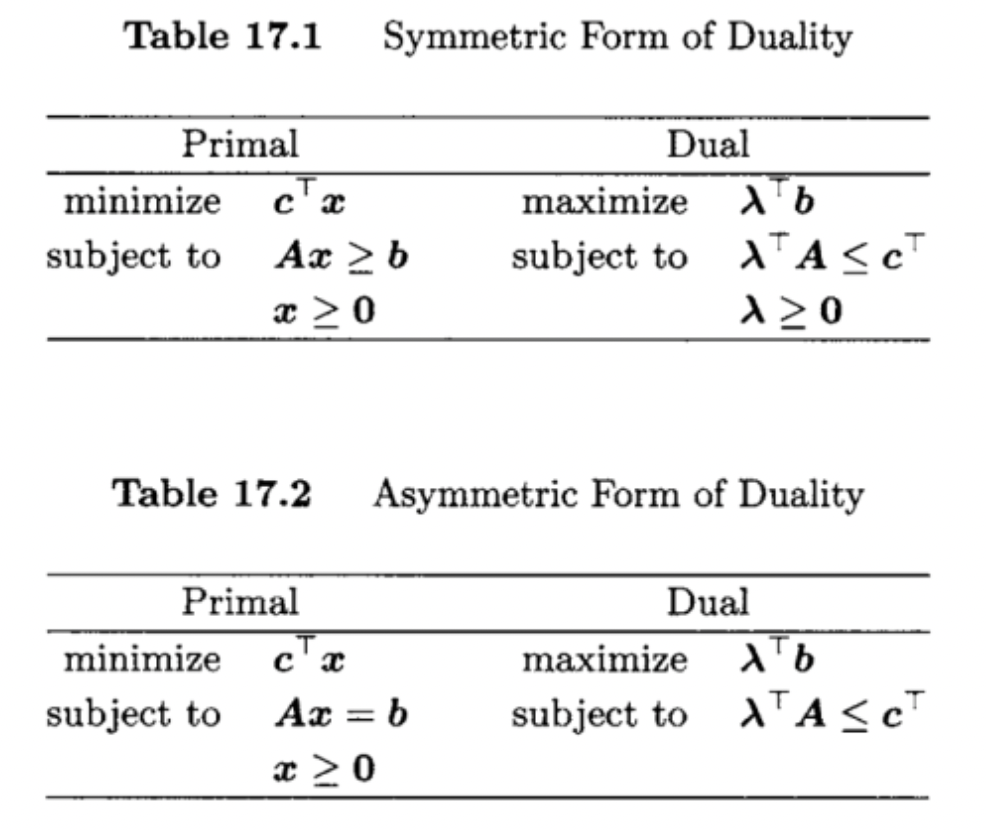

We now prove the symmetric case. First, we convert the primal problem for the symmetric form into the equivalent standard form by adding surplus variables:

minimizesubject to [c⊤,0⊤][xy][A,−I][xy]=b[xy]≥0. Note that x is optimal for the original primal problem if and only if [x⊤,(Ax−b) ⊤]⊤ is optimal for the primal in standard form. The dual to the primal in standard form is equivalent to the dual to the original primal in symmetric form. Therefore, the result above for the asymmetric case applies also to the symmetric case.

This completes the proof.

Theorem 3 (Complementary Slackness Condition)

The feasible solutions x and λ to a dual pair of problems (either in symmetric or asymmetric form) are optimal if and only if:

- (c⊤−λ⊤A)x=0.

- λ⊤(Ax−b)=0.

Proof

We first prove the result for the asymmetric case. Note that condition 2 holds trivially for this case. Therefore, we consider only condition 1.

⇒ : If the two solutions are optimal, then by Theorem 2, c⊤x=λ⊤b. Because Ax=b, we also have (c⊤−λ⊤A)x=0.

⇐ If (c⊤−λ⊤A)x=0, then c⊤x=λ⊤Ax=λ⊤b. Therefore, by Theorem 1, x and λ are optimal.

We now prove the result for the symmetric case.

⇒ We first show condition 1. If the two solutions are optimal, then by Theorem 2, c⊤x=λ⊤b. Because Ax≥b and λ≥0, we have

(c⊤−λ⊤A)x=c⊤x−λ⊤Ax=λ⊤b−λ⊤Ax=λ⊤(b−Ax)≤0. On the other hand, since λ⊤A≤c⊤ and x≥0, we have (c⊤−λ⊤A)x≥0. Hence, (c⊤−λ⊤A)x=0. To show condition 2, note that since Ax≥b and λ≥0, we have λ⊤ ( Ax−b)≥0. On the other hand, since λ⊤A≤c⊤ and x≥0, we have λ⊤(Ax−b)= (λ⊤A−c⊤)x≤0.

⇐ Combining conditions 1 and 2, we get c⊤x=λ⊤Ax=λ⊤b. Hence, by Theorem 1, x and λ are optimal.

Note that if x and λ are feasible solutions for the dual pair of problems, we can write condition 1 , that is, (c⊤−λ⊤A)x=0, as " xi>0 implies that λai=ci,i=1, …,n," that is, for any component of x that is positive, the corresponding constraint for the dual must be an equality at λ. Also, observe that the statement " xi>0 implies that λ⊤ai=ci " is equivalent to "λ⊤ai<ci implies that xi=0." A similar representation can be written for condition 2 .

Consider the asymmetric form of duality. Recall that for the case of an optimal basic feasible solution x,r⊤=c⊤−λ⊤A is the vector of reduced cost coefficients. Therefore, in this case, the complementary slackness condition can be written as r⊤x=0.